Representing and handling text is a fundamental task for computer systems. Within the American Standard Code for Information Interchange (ASCII), a defined subset of printable characters supports human-readable communication. These characters are designed to be displayed or printed: the uppercase and lowercase letters of the English alphabet, the ten decimal digits, common punctuation marks and symbols, and the space character. For instance, the letter ‘A’ is represented by the decimal value 65, the digit ‘0’ by 48, and a space by 32. Because every conforming system maps the same numbers to the same characters, text is interpreted and displayed consistently across systems and applications. Without such a shared mapping, exchanging text between devices would be prone to errors and misinterpretation. This standard underlies much of modern digital communication.

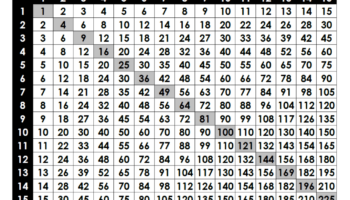
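
The code values mentioned above can be checked directly. The following is a minimal sketch in Python, using the built-in `ord` and `chr` functions; the variable names `samples` and `codes` are chosen here for illustration only.

```python
# Look up the numeric code for a few printable characters.
samples = ["A", "0", " "]
codes = {ch: ord(ch) for ch in samples}
print(codes)  # {'A': 65, '0': 48, ' ': 32}

# chr() is the inverse of ord(): it maps a code back to its character.
for ch, code in codes.items():
    assert chr(code) == ch
```

Any language that exposes character codes would serve equally well; Python is used here only because the conversion takes a single call in each direction.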

The utility of this printable subset extends well beyond display. It plays a central role in data storage, encoding schemes, and network protocols. Many file formats, particularly plain text files, rely on it to represent textual content. UTF-8 is backward compatible with ASCII: it encodes every ASCII character as the same single byte and uses multi-byte sequences for characters from other writing systems. In networking, many text-based protocols (HTTP/1.1 and SMTP, for example) use ASCII text for the commands and responses exchanged between client and server applications. This ubiquity has fostered interoperability, allowing systems developed by different parties to communicate effectively. Furthermore, the subset is small and contiguous: its 95 characters occupy the range from decimal 32 to 126, so each one fits in 7 bits and a single range comparison determines whether a byte is printable. This simplicity is particularly useful in resource-constrained environments, such as embedded systems or low-bandwidth networks.

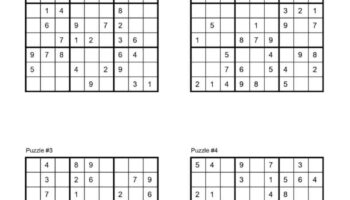
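
To make the range and its relationship to UTF-8 concrete, here is a short Python sketch. The constant names `PRINTABLE_START` and `PRINTABLE_END` are illustrative, not part of any standard library.

```python
# Inclusive bounds of the printable range discussed above.
PRINTABLE_START, PRINTABLE_END = 32, 126

# Build the full printable subset, from space through tilde.
printable = "".join(chr(c) for c in range(PRINTABLE_START, PRINTABLE_END + 1))
print(len(printable))  # 95

# Text limited to this subset produces identical bytes in ASCII and UTF-8.
ascii_bytes = printable.encode("ascii")
utf8_bytes = printable.encode("utf-8")
assert ascii_bytes == utf8_bytes

# Each character occupies exactly one byte, and every byte fits in 7 bits.
assert len(utf8_bytes) == len(printable)
assert all(b < 128 for b in utf8_bytes)
```

The byte-for-byte equality is what allows a file containing only these characters to be read as either ASCII or UTF-8 without conversion.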

Understanding this character set makes more complex encoding schemes and data representation techniques easier to follow. It also gives deeper insight into data validation, which ensures that input conforms to an expected format and helps prevent unexpected behavior. For example, when processing user input in a web application, verifying that the input contains only expected characters helps guard against some security vulnerabilities. This knowledge also helps when troubleshooting data corruption, by identifying where and why text was misinterpreted during transmission or storage. In essence, a solid grasp of these characters and their numeric representations makes it easier to understand, analyze, and manipulate text within computer systems. The subsequent discussion explores applications of this character set in programming.
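
As a starting point, the input check described above can be sketched in Python. The helper names `is_printable_ascii` and `first_invalid_index` are hypothetical, and the policy of accepting only codes 32 through 126 is an assumption made for illustration; a real application may also need to accept newlines, tabs, or non-ASCII text.

```python
from typing import Optional


def is_printable_ascii(text: str) -> bool:
    """Return True if every character has a code from 32 to 126 inclusive."""
    return all(32 <= ord(ch) <= 126 for ch in text)


def first_invalid_index(text: str) -> Optional[int]:
    """Return the index of the first character outside 32..126, or None."""
    for index, ch in enumerate(text):
        if not 32 <= ord(ch) <= 126:
            return index
    return None


print(is_printable_ascii("Hello, world!"))  # True
print(is_printable_ascii("line\n"))         # False: newline is code 10
print(first_invalid_index("caf\u00e9"))     # 3: the accented letter is code 233
```

Reporting the position of the first offending character, rather than only a yes or no answer, is a design choice that helps when tracing where text was corrupted in transit or storage. A check like this is one layer of input validation and does not replace context-specific escaping.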